Big Data Analysis

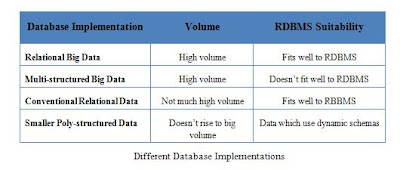

Put simply, 'Big Data' refers to datasets that are difficult to handle and work with. We can make the term more precise by categorizing such datasets according to their properties. Big data can mainly be reduced to two dimensions: the bigness and the structure of the data. These can be further categorized as follows. [reference]

According to the above structure, in our discussion of Big Data on RDBMS we can categorize different aspects of this topic according to the properties of the data set. We can simplify the different uses of database implementations as follows.

Relational Databases on Big Data

When scaling a relational database system into a relational big data system, the system designer needs to address many aspects, since traditional relational database systems are built to run on a single machine that tries to answer every query it receives. When such systems are scaled, the read and write throughput can become too much for the system to handle. This is mainly because relational database systems were not designed for distributed or shared systems, and the additional overhead incurred by these processes disrupts the normal operation of the system. The scaling techniques used on such a system add significant complexity and reduce fault tolerance.

Relational Data Model

- Handle high incoming request load in system

In a relational database system we can batch-write the incoming requests to increase the performance of the system. But if we consider a system that handles 'Big Data' scale requests, load at that scale cannot be addressed by batching writes and reads alone.
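As a rough illustration of batched writes on a single machine, here is a minimal sketch using Python's built-in `sqlite3` module. The `requests` table, its columns, and the helper names are hypothetical, chosen only for this example. Every batch still goes to the same database, which is why batching alone stops helping once the load outgrows one machine.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with a hypothetical 'requests' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE requests (user_id INTEGER, payload TEXT)")
    return conn


def write_in_batches(conn, rows, batch_size=1000):
    """Insert rows in fixed-size batches, committing once per batch.

    Returns the number of rows written.
    """
    cur = conn.cursor()
    written = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # One executemany call and one commit per batch instead of per row.
        cur.executemany(
            "INSERT INTO requests (user_id, payload) VALUES (?, ?)", batch
        )
        conn.commit()
        written += len(batch)
    return written
```

Batching reduces the per-row commit overhead, but all writes are still serialized through one node.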

- Creating database copies

When scaling up the database, different copies of it need to be maintained to increase the availability of the data. Otherwise, when a system or network failure happens, the database will become inconsistent or unavailable. Not only is this a manual, multi-step process in relational databases, but it also creates more problems when new nodes are added to the system. These kinds of bottlenecks are not suitable for a system that handles a huge amount of data, since adding new nodes to increase performance must be low-effort and cost effective.
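The manual, multi-step nature of maintaining copies can be sketched as follows. This is a toy model, not how any particular relational product replicates: the `ManuallyReplicatedDB` class and the `events` table are hypothetical, and in-memory `sqlite3` databases stand in for separate nodes. The application itself has to copy all existing data to each new node and then repeat every write on every copy.

```python
import sqlite3


class ManuallyReplicatedDB:
    """Toy primary plus replicas, kept in sync by the application itself."""

    def __init__(self):
        self.primary = sqlite3.connect(":memory:")
        self.replicas = []

    def setup(self):
        self.primary.execute(
            "CREATE TABLE events (id INTEGER PRIMARY KEY, body TEXT)"
        )
        self.primary.commit()

    def add_replica(self):
        """Step 1: create the node. Step 2: copy all existing data to it."""
        replica = sqlite3.connect(":memory:")
        self.primary.backup(replica)  # full copy; cost grows with data size
        self.replicas.append(replica)
        return replica

    def write(self, body):
        """Step 3: every write must be repeated on every copy."""
        for node in [self.primary] + self.replicas:
            node.execute("INSERT INTO events (body) VALUES (?)", (body,))
            node.commit()

    def counts(self):
        """Row count per node, primary first."""
        return [
            node.execute("SELECT COUNT(*) FROM events").fetchone()[0]
            for node in [self.primary] + self.replicas
        ]
```

If any step fails partway, for example a write reaching the primary but not a replica, the copies diverge. That is the inconsistency risk described above.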

- Complexity when changing the database schema

In relational database systems, when we need to change the database or one of its tables, the schema migration needs to be handled manually on each and every shared node, and updating a schema can be time consuming and painful. So if we implement our system on relational databases, operating and maintaining it will be more troublesome.
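To make the per-node cost concrete, here is a small sketch that applies one schema change to several nodes in turn. In-memory `sqlite3` databases again stand in for nodes, and the `users` table and helper names are hypothetical. The point is that the migration must be run, and its failures tracked, once per node.

```python
import sqlite3


def make_nodes(n):
    """Create n independent databases that all share the same schema."""
    nodes = []
    for _ in range(n):
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
        nodes.append(conn)
    return nodes


def migrate_all(nodes, ddl):
    """Apply a DDL statement to every node.

    Returns the indices of nodes where the migration failed, so an
    operator can retry or repair them by hand.
    """
    failed = []
    for i, conn in enumerate(nodes):
        try:
            conn.execute(ddl)
            conn.commit()
        except sqlite3.Error:
            failed.append(i)
    return failed


def columns(conn):
    """Column names of the hypothetical 'users' table on one node."""
    return [row[1] for row in conn.execute("PRAGMA table_info(users)")]
```

Any node left in `failed` now has a different schema from the rest, and queries that assume the new column will break on it until it is fixed.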

- Fault tolerance

In a parallel database system, there is a high probability that some node will go down because its disk fills up or is damaged, or because the node itself breaks down. As the number of nodes sharing the system increases, the system becomes less fault-tolerant.
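A quick calculation shows why more nodes means more failures. It assumes, purely for illustration, that nodes fail independently and that each has the same probability of being down. Under that assumption, the chance that at least one of n nodes is down is 1 - (1 - p)^n.

```python
def prob_any_node_down(n_nodes, p_node_failure):
    """Probability that at least one of n_nodes is down.

    Assumes independent failures with identical per-node probability;
    real clusters often have correlated failures, so treat this as a
    rough guide only.
    """
    return 1.0 - (1.0 - p_node_failure) ** n_nodes
```

With a hypothetical per-node failure probability of 1%, a single node is down 1% of the time, while a 100-node system has at least one node down roughly 63% of the time.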

Multi-structured Model on Big Data

One example of this kind of database implementation is a system that stores log files. These systems can be highly scalable, analytical and cost effective. NoSQL comes into play in most of these use cases because of its properties, which are discussed in a later part of this report. The rapid, exponential growth of Web service technologies challenged the traditional theories and approaches of database systems. For this reason, most of the research on multi-structured big data and its management was conducted by large web companies such as Google, Amazon and Facebook, since they were the first to face these requirements in their own systems, before most industry-driven applications adopted the technology.

No-SQL Data Model

- Handle high incoming request load in system

Database systems designed for big data address this issue at the conceptual level, and it is hidden from the user and the application.

- Creating database copies

Maintaining different copies of a database manually becomes a complex task for the system itself and for its designers. In database systems designed for big data, the parallelism of the system is hidden from the application, which makes the system friendlier to its designers.

- Complexity when changing the database schema

No-SQL database systems, which usually handle big databases, usually keep and maintain some amount of metadata and row data about the system. During a change in the system they use this metadata for the modification rather than just aggregating from the data itself, so this kind of problem does not occur on the system.

- Fault tolerance